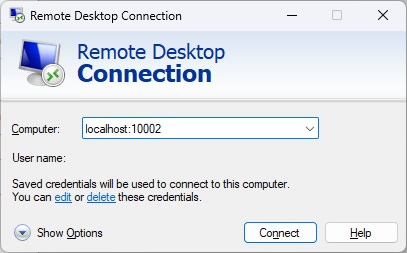

Every once in a while, I have trouble connecting to my Linux servers via Windows Remote Desktop. This is very annoying, because all the servers use the same configuration, yet some throw errors while others work fine. After spending countless hours on it, here are my solutions:

- Add delay_ms=2000 to /etc/xrdp/xrdp.ini

- Remove and rebuild the user’s home directory

- Set the color depth of Windows Remote Desktop Client to 24

The first solution is pretty straightforward:

    sudo nano /etc/xrdp/xrdp.ini
    # Add this line, then save and exit:
    # delay_ms=2000
    sudo systemctl restart xrdp.service
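If the same change has to be made on several servers, it can be scripted instead of edited by hand. The following is a minimal sketch, not a tested deployment script: `set_delay_ms` is a hypothetical helper name, and it assumes the file already contains a `delay_ms=` line (possibly commented out with `#` or `;`) and that GNU `sed` is available. Every matching line in the file is rewritten, so check the result if your `xrdp.ini` has the setting in more than one section.

```shell
#!/usr/bin/env bash
# Sketch: make sure delay_ms is active in an xrdp.ini-style file.
# set_delay_ms is a hypothetical helper, not something shipped with xrdp.
set_delay_ms() {
    local ini="$1" value="${2:-2000}"

    # Keep a backup next to the original before touching it
    cp -p "$ini" "$ini.bak" || return 1

    if grep -Eq '^[#;[:space:]]*delay_ms=' "$ini"; then
        # Uncomment and/or overwrite every existing delay_ms line in place
        sed -i -E "s/^[#;[:space:]]*delay_ms=.*/delay_ms=${value}/" "$ini"
    else
        # Assumption: without an existing line we do not guess the section
        echo "no delay_ms line found in $ini; add it by hand" >&2
        return 1
    fi
}
```

Run it as root against `/etc/xrdp/xrdp.ini` (for example `set_delay_ms /etc/xrdp/xrdp.ini 2000`), then restart the service with `sudo systemctl restart xrdp.service` as shown above.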

For the second solution, I made a copy of my home directory and then deleted most of the hidden files, e.g.,

    sudo su
    # Make a copy first
    rsync -avr /home/myuser/ /home/myuser_old/

Then I deleted most of the session-related files, such as .vnc/, .Xauthority, and .xsession-errors. Here is what was left when I started:

    drwx------ 19 myuser myuser 4096 Apr 9 10:12 .
    drwxr-xr-x. 5 root root 59 Apr 9 09:26 ..
    -rw------- 1 myuser myuser 7409 Apr 9 09:26 .bash_history
    -rw-r--r-- 1 myuser myuser 18 Oct 30 2018 .bash_logout
    -rw-r--r-- 1 myuser myuser 193 Oct 30 2018 .bash_profile
    -rw-r--r-- 1 myuser myuser 231 Oct 30 2018 .bashrc
    drwx------ 2 myuser myuser 25 May 11 2022 .ssh
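The backup-and-clean step can also be wrapped in a small function so that nothing is deleted unless the copy succeeded. This is only a sketch under a few assumptions: `reset_session_files` is a hypothetical helper name, it uses `cp -a` rather than `rsync` so it has no extra dependency, and the list of files to remove contains only the three named in this post. Extend the list to match whatever you decide to clear.

```shell
#!/usr/bin/env bash
# Sketch: back up a home directory, then remove stale desktop-session files.
# reset_session_files is a hypothetical helper; the file list is an assumption
# taken from the examples in this post, not a complete list.
reset_session_files() {
    local home="${1%/}" backup="${2%/}" name

    [ -d "$home" ] || { echo "no such directory: $home" >&2; return 1; }

    # Copy everything (including hidden files) first; abort if the copy fails
    mkdir -p "$backup" || return 1
    cp -a "$home/." "$backup/" || return 1

    # Remove only the session-related entries; everything else stays put
    for name in .vnc .Xauthority .xsession-errors; do
        rm -rf -- "${home:?}/$name"
    done
}
```

For example, `reset_session_files /home/myuser /home/myuser_old` (run as root) keeps `.ssh`, `.bashrc`, and the other shell files in place while clearing the session state.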

After I successfully logged in via xRDP, the system generated all of these files:

    drwx------ 19 myuser myuser 4096 Apr 9 10:12 .
    drwxr-xr-x. 5 root root 59 Apr 9 09:26 ..
    -rw------- 1 myuser myuser 7409 Apr 9 09:26 .bash_history
    -rw-r--r-- 1 myuser myuser 18 Oct 30 2018 .bash_logout
    -rw-r--r-- 1 myuser myuser 193 Oct 30 2018 .bash_profile
    -rw-r--r-- 1 myuser myuser 231 Oct 30 2018 .bashrc
    drwx------ 16 myuser myuser 4096 Apr 9 10:05 .cache
    drwxrwxr-x 15 myuser myuser 279 Apr 9 09:29 .config
    drwx------ 3 myuser myuser 25 Apr 9 09:27 .dbus
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Desktop
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Documents
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Downloads
    dr-x------ 2 myuser myuser 0 Apr 9 09:27 .gvfs
    -rw------- 1 myuser myuser 1252 Apr 9 10:05 .ICEauthority
    drwx------ 3 myuser myuser 19 Apr 9 09:27 .local
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Music
    drwxrwxr-x 2 myuser myuser 6 Apr 9 09:27 perl5
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Pictures
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Public
    drwx------ 2 myuser myuser 25 May 11 2022 .ssh
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Templates
    drwxr-xr-t 2 myuser myuser 6 Apr 9 09:27 thinclient_drives
    drwxr-xr-x 2 myuser myuser 6 Apr 9 09:27 Videos
    drwx------ 2 myuser myuser 316 Apr 9 10:04 .vnc
    -rw------- 1 myuser myuser 242 Apr 9 10:04 .Xauthority
    -rw------- 1 myuser myuser 0 Apr 9 10:04 .xsession-errors

That’s it, hope it helps.
